Google recently announced in a blog post that Google Lens, its artificial intelligence camera feature, can now recognize more than one billion items. When Lens launched a year ago, it could recognize only 250,000.

To build a foundation for training its algorithms, Google drew on hundreds of millions of Image Search queries. Lens also gathers data from photos captured on smartphones, and Google Shopping helps expand its product-recognition abilities. Although the set of items Lens can recognize is now massive, it will most likely still fail on rare and obscure items, such as vintage cars or stereo systems from the 1970s.

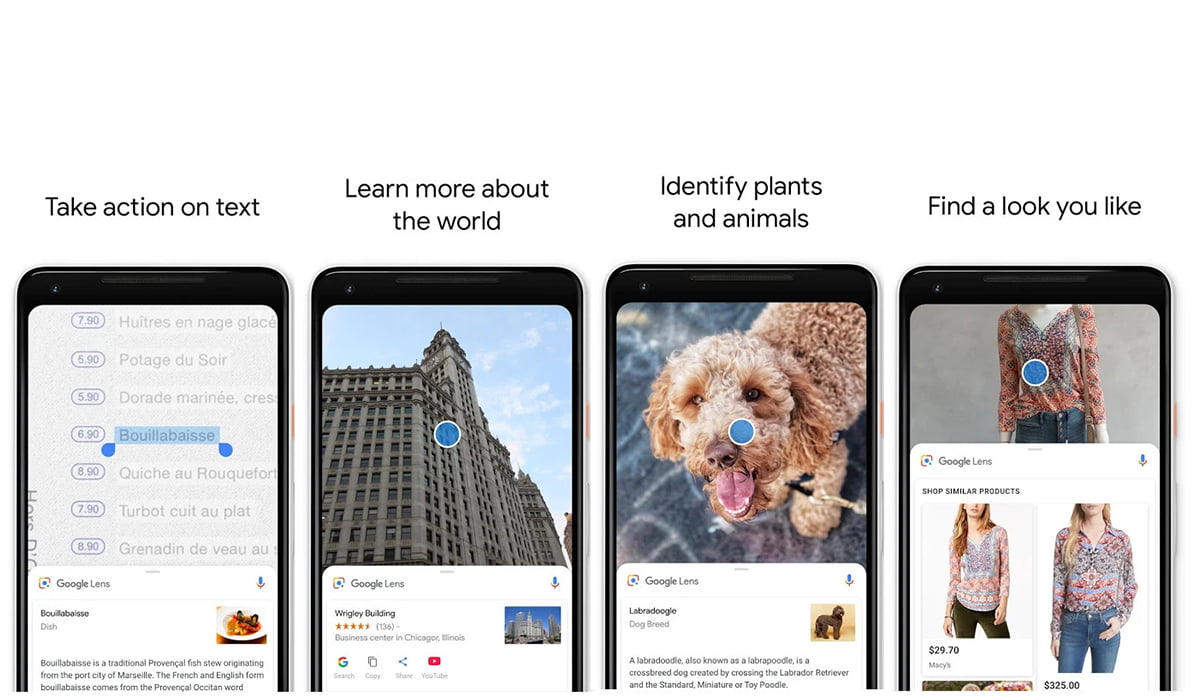

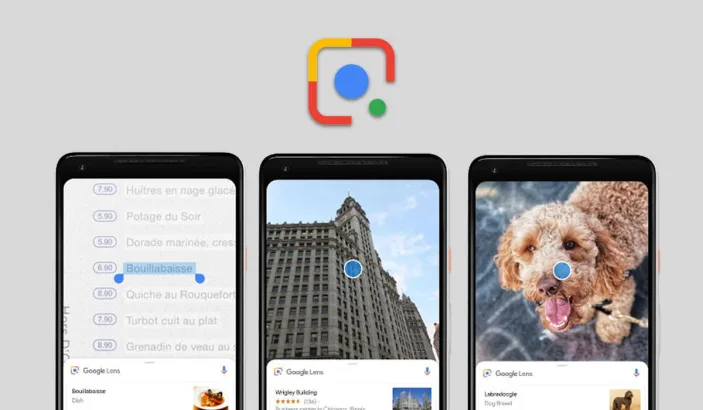

Google Lens has many practical applications. Using machine learning, it turns a photo into a search query and brings up relevant information about what the image shows.

Lens also uses TensorFlow, Google’s open-source machine learning framework, to connect images to the words that best describe them. For example, if a user snaps a picture of a friend’s PlayStation 4, Lens will associate the image with the labels “PlayStation 4” and “gaming console.”
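The article doesn’t describe Lens’s internals, but as a rough illustration of what “connecting images to words” can mean, here is a minimal plain-Python sketch of the last step of a multi-label image classifier. It assumes a model has already produced one raw score per label; each score is squashed through a sigmoid independently, and labels above a threshold are kept. The label list, scores, and 0.5 threshold are all hypothetical, and this is not Google’s or TensorFlow’s code.

```python
import math

# Hypothetical label vocabulary; a real model would cover a vast number of classes.
LABELS = ["PlayStation 4", "gaming console", "television", "Shiba Inu"]


def sigmoid(x):
    """Squash a raw score into a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-x))


def predict_labels(logits, threshold=0.5):
    """Return (label, probability) pairs above threshold, most confident first.

    Each label is scored independently (multi-label), so a photo can be
    both a "PlayStation 4" and a "gaming console" at the same time.
    """
    scored = [(label, sigmoid(z)) for label, z in zip(LABELS, logits)]
    kept = [(label, p) for label, p in scored if p >= threshold]
    return sorted(kept, key=lambda pair: pair[1], reverse=True)


# Made-up raw scores a model might produce for a photo of a PS4.
for label, prob in predict_labels([3.0, 2.2, -1.5, -4.0]):
    print(f"{label}: {prob:.2f}")
# PlayStation 4: 0.95
# gaming console: 0.90
```

Independent sigmoids are used here instead of a single softmax because one photo can legitimately carry several labels at once, which matches the PS4 example.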

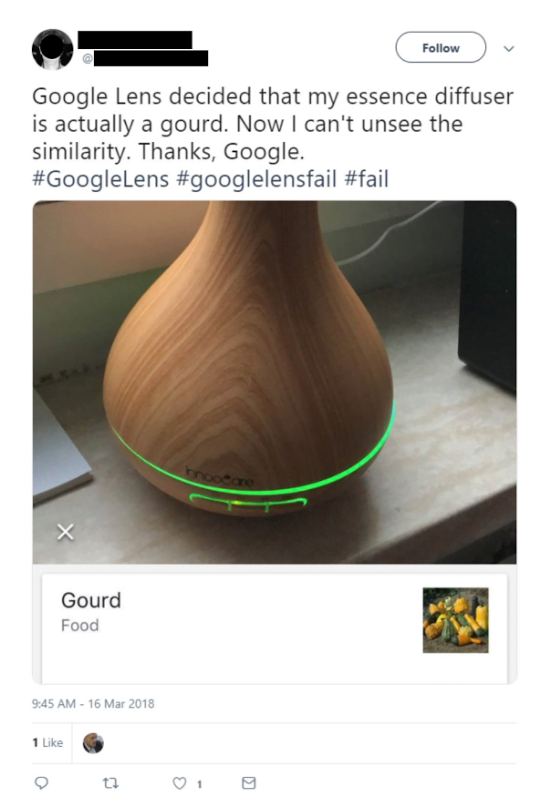

The algorithms then link those labels to Google’s Knowledge Graph, which holds tens of billions of facts, including facts about the Sony PlayStation 4. This is how the system learns that the PS4 is a gaming console. The system isn’t perfect, though: it can be confused by objects that look similar.
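To make the idea of “linking a label to facts” concrete, here is a toy sketch, assuming a tiny hand-written graph of (subject, relation) → object facts. It walks “is_a” edges upward to find every category an entity belongs to. The data and the `categories` / `is_a` helpers are hypothetical; the real Knowledge Graph is vastly larger and is not queried this way.

```python
# Hypothetical mini knowledge graph: (subject, relation) -> object.
GRAPH = {
    ("PlayStation 4", "is_a"): "gaming console",
    ("PlayStation 4", "made_by"): "Sony",
    ("gaming console", "is_a"): "electronic device",
    ("Shiba Inu", "is_a"): "dog breed",
    ("dog breed", "is_a"): "animal",
}


def categories(entity):
    """Walk 'is_a' edges upward and return every category the entity belongs to."""
    found = []
    seen = {entity}  # guards against cycles in malformed data
    current = entity
    while (current, "is_a") in GRAPH:
        current = GRAPH[(current, "is_a")]
        if current in seen:
            break
        seen.add(current)
        found.append(current)
    return found


def is_a(entity, category):
    """True if the graph says entity is (directly or indirectly) a kind of category."""
    return category in categories(entity)


print(categories("PlayStation 4"))            # ['gaming console', 'electronic device']
print(is_a("Shiba Inu", "dog breed"))         # True
print(is_a("Shiba Inu", "smartphone brand"))  # False
```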

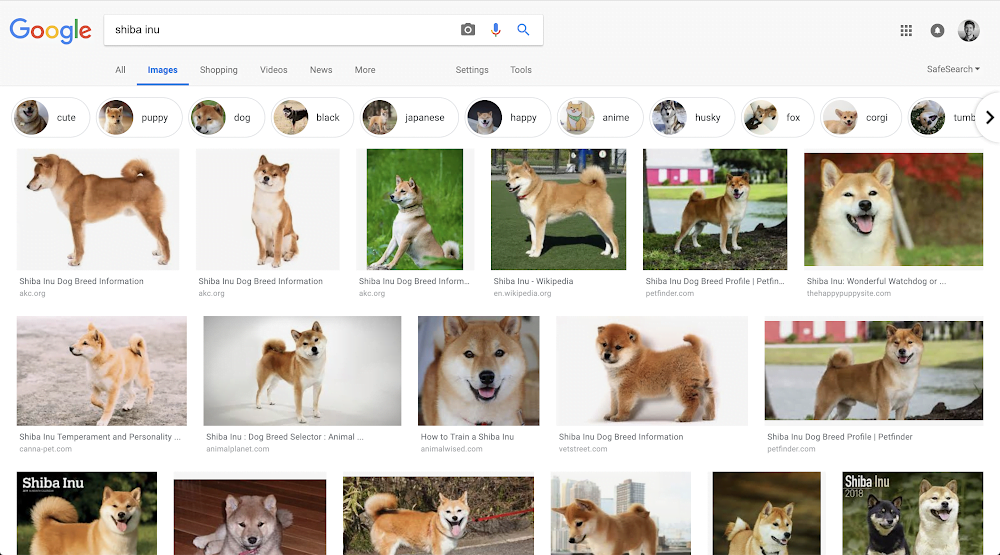

Lens also draws on the thousands of images returned for each search query. These query-specific images help train the algorithms to produce more accurate results. Take this Shiba Inu search, for example.

Google’s Knowledge Graph brings up tens of billions of facts on topics ranging from “pop stars to puppy breeds.” This is what tells the system that a Shiba Inu is a dog breed and not, say, a smartphone brand.

The system is still easy to fool. People take photos from different angles, against different backgrounds, and in varying lighting conditions, all of which can produce images that look quite different from the ones Google Lens was trained on.

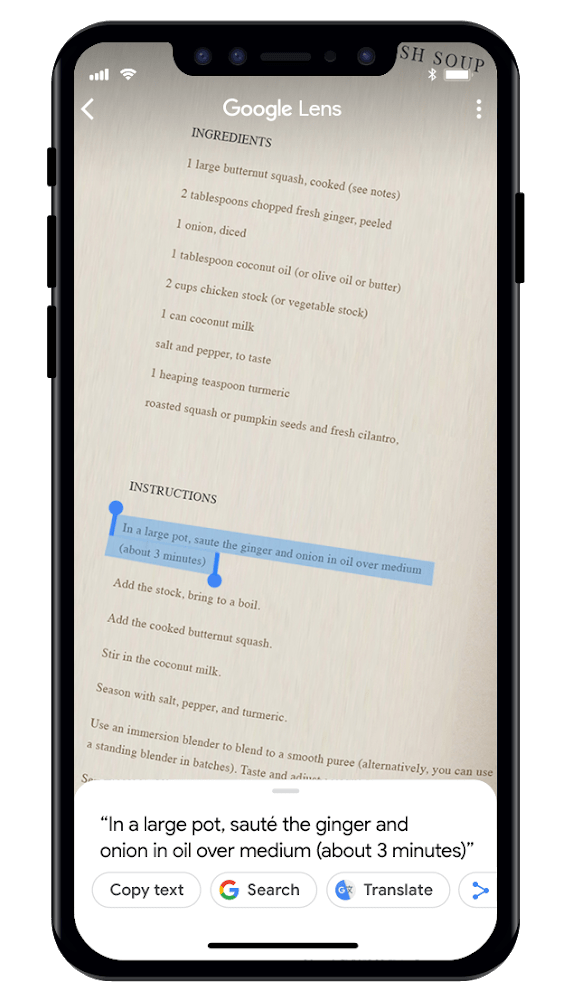

There’s still work to be done to make Lens smarter, which is why Google’s team is expanding Lens’s database with pictures taken on smartphones. Google Lens can also read text from menus and books.

Lens makes these words interactive, so users can act on them. For example, users can point their smartphone’s camera at a business card and add it to their contacts, or save the ingredients from a printed recipe book to their shopping list.
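Turning a business card into a contact means going from raw recognized text to structured fields. Google hasn’t said how Lens does this; a minimal sketch, assuming the OCR text is already available, could pull out an email and phone number with regular expressions and treat the first line as the name. The patterns and the “first line is the name” rule are simplifying assumptions, and `parse_business_card` is a hypothetical helper.

```python
import re

# Deliberately simple patterns; real-world emails and phone numbers vary far more.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d ().-]{7,}\d")


def parse_business_card(text):
    """Pull a name, email, and phone number out of OCR'd business-card text."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    email = EMAIL_RE.search(text)
    phone = PHONE_RE.search(text)
    return {
        # Heuristic assumption: the first non-empty line is the person's name.
        "name": lines[0] if lines else None,
        "email": email.group(0) if email else None,
        "phone": phone.group(0) if phone else None,
    }


card = """Jane Doe
Senior Engineer
jane.doe@example.com
+1 (555) 123-4567"""
print(parse_business_card(card))
```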

Put simply, Google taught Lens to read by developing an optical character recognition (OCR) engine and combining it with the understanding of language it has built through Google Search and the Knowledge Graph. The machine learning algorithms were trained on a wide range of characters, languages, and fonts, drawing on sources such as Google Books scans.
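One simple, well-known way for knowledge of language to clean up raw OCR output is dictionary-based correction: snap each recognized token to the closest known word by Levenshtein edit distance, if it is close enough. This is a stand-in technique for illustration, not Lens’s actual pipeline; the tiny menu vocabulary and the distance cutoff of 2 are assumptions, and production systems rely on far richer language models.

```python
def edit_distance(a, b):
    """Levenshtein distance: the minimum number of single-character edits."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute (or match)
        prev = curr
    return prev[-1]


# Hypothetical vocabulary, e.g. words expected on a restaurant menu.
VOCABULARY = ["pizza", "margherita", "with", "basil", "tomato", "mozzarella"]


def correct_token(token, max_distance=2):
    """Replace an OCR token with the nearest vocabulary word if it is close enough."""
    word = token.lower()
    best = min(VOCABULARY, key=lambda v: edit_distance(word, v))
    return best if edit_distance(word, best) <= max_distance else token


def correct_line(line):
    """Correct every whitespace-separated token in a line of OCR output."""
    return " ".join(correct_token(t) for t in line.split())


# Typical OCR confusions: 4 for A, l for I, 1 for I or i.
print(correct_line("PIZZ4 MARGHERlTA w1th BAS1L"))  # pizza margherita with basil
```

Tokens that are not close to any known word are left untouched rather than forced into the vocabulary.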

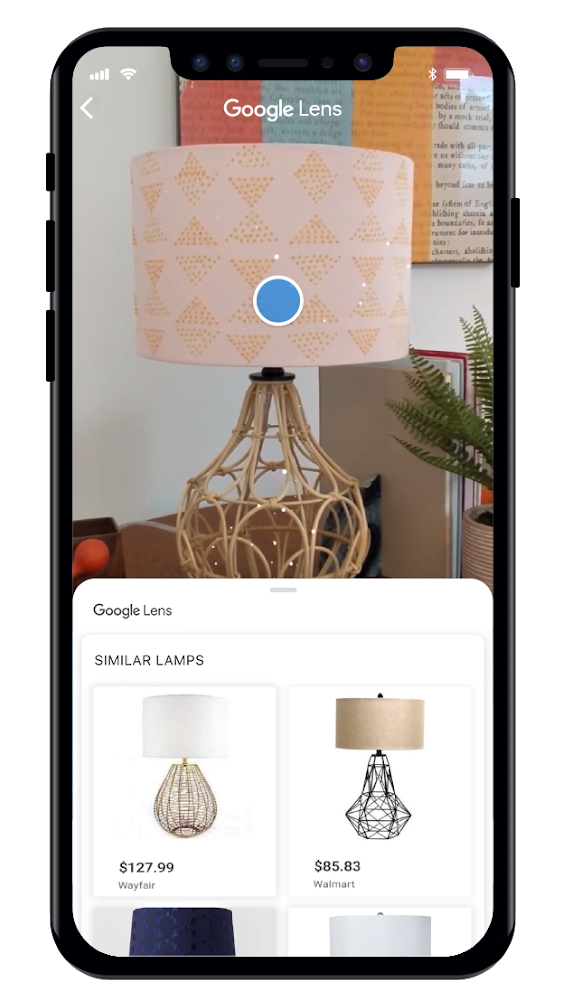

Lens also has a style search feature that suggests stylistically similar items. When a user points the camera at an outfit or a piece of home decor, Lens suggests items with a similar style. For example, if a user sees a lamp they find interesting, Lens will suggest lamps with similar designs that are for sale, along with reviews of those products.
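Google hasn’t published how style search ranks its suggestions. A common way to build “find visually similar items” is nearest-neighbour search over image embeddings, sketched below under the assumption that every catalogue product already has an embedding vector. The three-dimensional vectors, product names, and `similar_items` helper are invented for illustration; results are ranked by cosine similarity.

```python
import math

# Hypothetical "style embeddings": in practice a neural network would map each
# product photo to a long vector; three made-up dimensions keep this readable.
CATALOGUE = {
    "brass arc floor lamp": [0.9, 0.1, 0.3],
    "mid-century table lamp": [0.8, 0.2, 0.4],
    "industrial cage pendant": [0.1, 0.9, 0.2],
    "paper lantern": [0.2, 0.3, 0.9],
}


def cosine_similarity(u, v):
    """1.0 means the vectors point the same way; lower means less alike."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def similar_items(query_embedding, k=2):
    """Return the k catalogue items whose embeddings are closest in style."""
    ranked = sorted(
        CATALOGUE,
        key=lambda name: cosine_similarity(query_embedding, CATALOGUE[name]),
        reverse=True,
    )
    return ranked[:k]


# Embedding for a (hypothetical) photo of a lamp the user spotted.
print(similar_items([0.85, 0.15, 0.35]))  # ['brass arc floor lamp', 'mid-century table lamp']
```

The brute-force sort is fine for four items; with a catalogue the size of Google Shopping’s, an approximate nearest-neighbour index would be the usual replacement.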

Our smartphones are becoming smarter every day, and we rely on them heavily because they are portable and easy to use. Google realized this long ago and has focused heavily on improving its smartphone-based services; Google Lens is just one example.

Deep learning algorithms like those used in Google Lens might soon help with medical diagnosis, too. They have shown great potential in detecting signs of diabetic retinopathy just by analyzing photos of the eye.

For now, Lens can only work on smartphones, but we see it becoming a ubiquitous tech tool in the near future.
