Ever wonder how those fake news-detecting algorithms actually work? What, exactly, do they look for and how do they determine the context of a news story in order to classify it?

Well, a research team at MIT wondered the same thing and they built a machine learning model that assesses the linguistic hints that indicate whether or not something is “fake news.”

One problem with many of the algorithms that govern our lives is that the companies selling them claim the inner workings as intellectual property, and so refuse to share the details of how the models work with the people who use them. The MIT scientists, by contrast, aimed to make their model as transparent as possible.

In a paper presented at the Conference and Workshop on Neural Information Processing Systems, the researchers claimed their model could also help reveal how such algorithms can adapt to newer news topics, that is, issues involving language the systems weren’t initially trained to assess.

Most of the fake news detectors being used now, they claim, “classify articles based on text combined with source information, such as a Wikipedia page or website,” which may not be as reliable when assessing new topics.

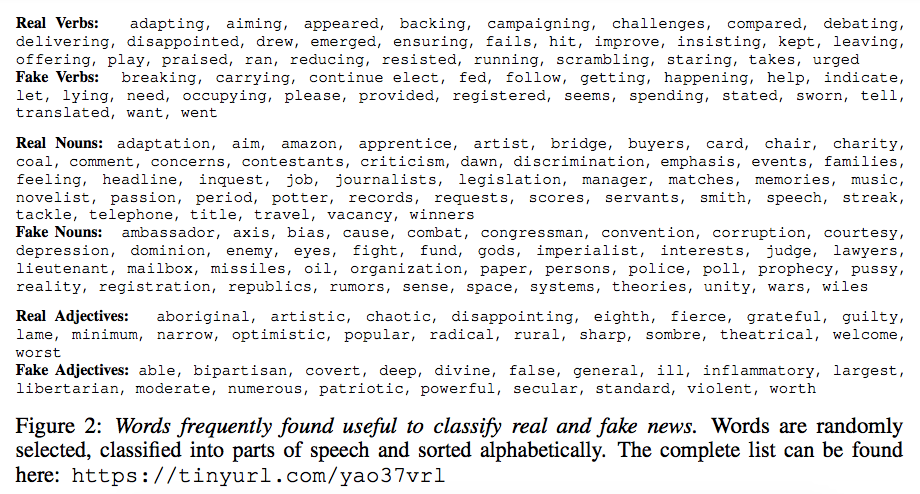

A press release on the research states that the team developed a deep learning model that works solely by classifying language patterns “which more closely represent a real-world application for news readers.” Their model searches for words that appear frequently in both types of news to help in the classification. In the case of fake news, for example, it identifies exaggerations and superlatives to help make an initial classification.

The complete list of words the researchers found useful in classifying real and fake news is available on GitHub.

“In our case, we wanted to understand what was the decision-process of the classifier based only on language, as this can provide insights on what is the language of fake news,” says co-author Xavier Boix, a postdoc at MIT’s Center for Brains, Minds, and Machines (CBMM).

In an effort to build a more reliable and transparent model, the researchers used a convolutional neural network, a type of network often used to analyze images. These deep learning networks can learn filters that previously would have been hand-coded by engineers. This is meant to ensure a certain amount of independence and to reduce bias in the system.
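
To make the idea of a filter more concrete, here is a minimal sketch of how one convolutional filter might slide over a sentence and score each window of consecutive words. It is an illustration only, not the researchers' architecture: the function name `slide_filter`, the two-dimensional word vectors, the filter weights, and the window width of three are all made up for the example. In a real network the filter weights would be learned from data rather than written by hand.

```python
def slide_filter(embeddings, filt):
    """Score every window of consecutive word vectors with one filter.

    Each score is the sum of element-wise products between the filter
    and the window it currently covers (a 1D convolution over words).
    """
    width = len(filt)
    scores = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        score = sum(
            weight * value
            for filt_row, word_vec in zip(filt, window)
            for weight, value in zip(filt_row, word_vec)
        )
        scores.append(score)
    return scores


# Toy word vectors, invented for illustration (not learned embeddings).
embeddings = [
    [0.0, 1.0],  # "the"
    [1.0, 0.0],  # "most"
    [1.0, 1.0],  # "shocking"
    [0.0, 0.0],  # "story"
]

# One hypothetical filter spanning three words.
filt = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]

scores = slide_filter(embeddings, filt)
strongest = max(scores)  # max pooling keeps the strongest response
```

The filter responds most strongly to the window whose word vectors line up with its weights, which is how a trained network can end up "looking for" particular phrases without anyone listing them in advance.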

The researchers also needed to be careful not to train the system on solely political news. Their final product was trained on a set of articles that did not include the word “Trump.”

Instead of searching for individual words, their model analyzed triplets of words. The model saw the triplets as patterns that provided more context.
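
As a rough illustration of what "triplets of words" means in practice, the sketch below splits a sentence into every run of three consecutive words. The helper name `word_triplets` and the simple lowercase tokenization are assumptions made for this example; the article does not describe how the researchers preprocessed their text.

```python
import re


def word_triplets(text):
    """Return every run of three consecutive words, in reading order."""
    # Assumed tokenization: lowercase words, digits, and apostrophes.
    words = re.findall(r"[a-z0-9']+", text.lower())
    return [tuple(words[i:i + 3]) for i in range(len(words) - 2)]


triplets = word_triplets("The most shocking story you will ever read")
for triplet in triplets:
    print(" ".join(triplet))
```

An eight-word sentence yields six overlapping triplets, so each word is seen alongside its neighbors rather than in isolation.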

The researchers trained the model on a fake news dataset hosted on Kaggle, which contains around 12,000 fake news pieces gathered from 244 different websites. They then compiled their own dataset of real news samples, with roughly 2,000 hand-picked articles from the New York Times and over 9,000 from The Guardian. The model achieved 87% accuracy (93% in an initial test that included the word “Trump”).

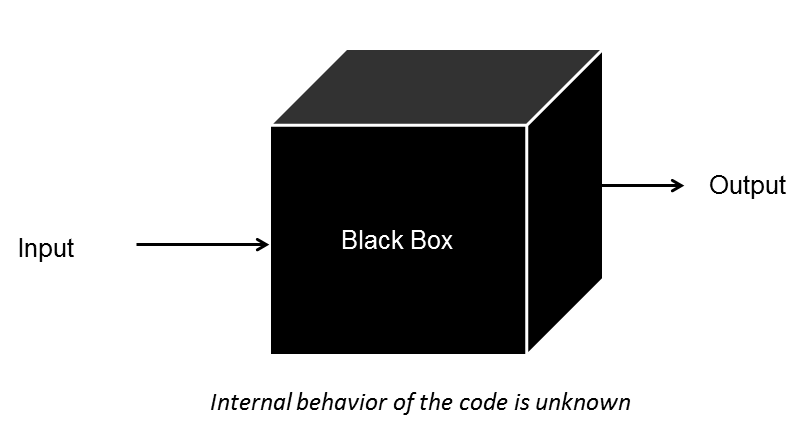

Because these models are often a black box even to those who create them, it’s the job of scientists to then “open them up” to determine how the algorithms operate. This team was able to pull out the instances in which the model made a prediction and track down the exact words it used in each article. This alone could make the model useful to readers if it were able to highlight potentially problematic phrases that citizens could then use to assess the trustworthiness of the source.
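
A reader-facing highlighter of this kind could look something like the sketch below, which scans an article for word triplets that appear in a lookup table and reports where they occur. Everything in it is hypothetical: the `SUSPICIOUS_TRIPLETS` table, its weights, and the `flag_triplets` helper are invented for illustration, whereas the researchers' model derives its patterns from a trained network.

```python
import re

# Hypothetical patterns and weights, invented for this example only.
SUSPICIOUS_TRIPLETS = {
    ("you", "won't", "believe"): 0.9,
    ("the", "most", "shocking"): 0.8,
}


def flag_triplets(text, patterns=SUSPICIOUS_TRIPLETS):
    """Return (word position, phrase, weight) for each matching triplet."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    hits = []
    for i in range(len(words) - 2):
        triplet = tuple(words[i:i + 3])
        if triplet in patterns:
            hits.append((i, " ".join(triplet), patterns[triplet]))
    return hits


hits = flag_triplets("You won't believe the most shocking claim of the year")
for position, phrase, weight in hits:
    print(f"word {position}: '{phrase}' (weight {weight})")
```

A browser extension could use positions like these to underline the flagged phrases and leave the final judgment to the reader.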

Boix suggested that:

“If I just give you an article, and highlight those patterns in the article as you’re reading, you could assess if the article is more or less fake. It would be kind of like a warning to say, ‘Hey, maybe there is something strange here.'”

The researchers have also made their fake news pattern detector available to test online.

For now, the researchers are still working on the model and determining how to make it more useful for readers as well as intermediary automated fact-checkers. But they did suggest that it could be the basis of an app or browser extension in the future.

“Fake news is a threat for democracy,” Boix says. “In our lab, our objective isn’t just to push science forward, but also to use technologies to help society. … It would be powerful to have tools for users or companies that could provide an assessment of whether news is fake or not.”
