Associating political correctness with Facebook is not something new. While the platform has been trying to tackle hate speech for quite a long time, it has failed miserably, and there are multiple instances where it shied away from its responsibility, including its failure to address online hate speech related to violence in Myanmar.

It wouldn’t be wrong to say that Facebook guides public debates in certain directions. Especially when it comes to elections, many people feel that Facebook is biased.

Every other week, a small group of Facebook employees meets in Menlo Park, California, to discuss the company’s content regulation policies. A proposed “Rulebook” meant for the moderators leaked. We are glad that it did.

While the bias didn’t surprise us, the way the content is presented did.

Here are a few of the slides from the presentation of the rulebook that the New York Times got their hands on:

Controlling other countries’ politics

Meddling in other countries’ politics is Mr. Zuckerberg’s leisure pursuit, or is it not?

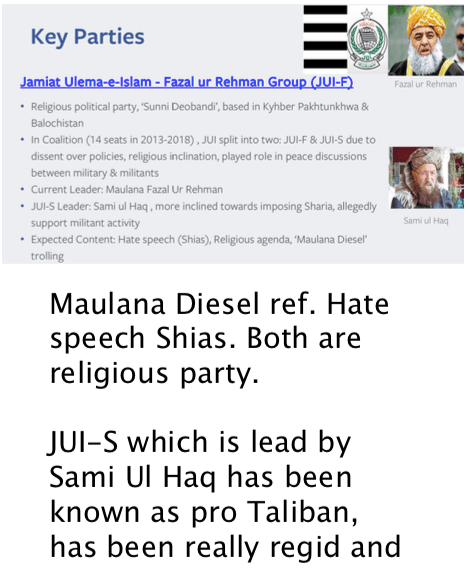

This slide explained how the moderators should look out for certain terms that Facebook identifies as “derogatory.” It is a fact that a few of the political groups are known to be religious fundamentalists in Pakistan. So the posts mentioning/supporting these groups are to be strictly monitored. But what’s intriguing is the information revealed by The Times from internal emails allowing posts that praise the “Taliban,” a religiously motivated extremist group demonized globally.

Likewise, names as harmless as “Maulana Diesel” are barred. What Facebook needs to understand here is the fact that it can never fully understand the sensitivity of region-centric content, especially when it relates to politics.

If you are in Pakistan, the electronic media won’t show you the disturbances happening across the country on Election Day. With more than half of the population on Facebook, the platform serves as a leading news agency on such an important day. Propaganda on Facebook will spread like wildfire at such a crucial time. Imagine your views being manufactured by social media on Election Day.

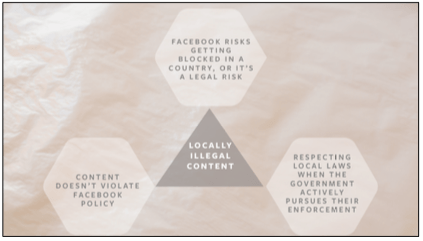

The above image tells us that the platform fears getting blocked in different regions and therefore respects certain limitations laid down by different countries. Although the platform pushes things slightly over the edge, it tends to hold itself back when it comes to its reputation – such hypocrisy.

The social media platform recently banned a far-right, pro-Trump group called the Proud Boys in the United States. It even blocked the individual accounts associated with the group. So there are many instances when the platform just could not help but poke its nose into public debates.

However, it remained silent for several days when a Sudanese teen bride was auctioned off on the platform. The young girl was sold to a businessman after a week-long bidding war. What does that tell you about a platform that claims to celebrate inclusivity and to make sure nobody gets offended in any way? That’s what I thought!

Keeping nationalism in check

Words and phrases can mean different things across cultures. If “tell me something I don’t know” was your reaction, then I’m sure this one will make you ponder why so many people still use Facebook.

The moderators at Facebook rely on Google Translate to regulate content in different areas of the world. To make matters more complicated, the moderators do not even have updated information about the crisis they are discussing.

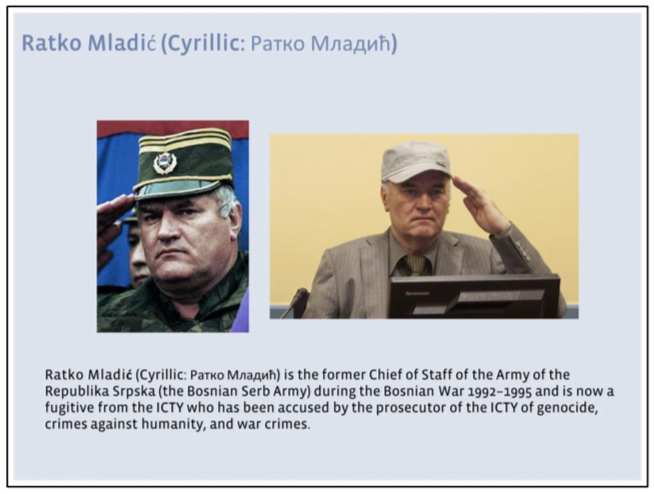

In trying to explain the rise of nationalism in the Balkan region, the slide that the team came up with painted a picture of true recklessness on the social media platform’s part.

This slide shows a Balkan war criminal. The information on the slide would have you believe that he is still a fugitive, whereas he was arrested back in 2011. That is the height of misinformation. In another slide, Ratko Mladic’s name was replaced with Rodney Young.

Reading between the lines

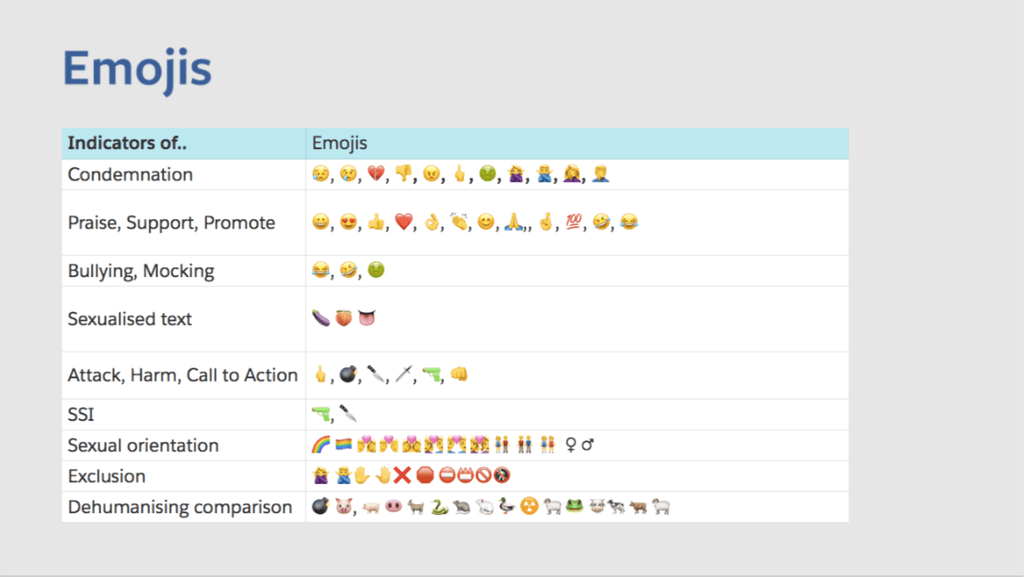

A platform that is known to have incited violence in Myanmar is now trying to read between the lines to make sure that nothing harmful gets posted. From sexual innuendos to potential terror-centric emojis, Big Brother has it figured out.

The moderators are instructed to follow these emoji guidelines and blindfold their subjective eye. How thoughtful of Facebook.

Monika Bickert, Facebook’s head of global policy management, said:

“There’s a real tension here between wanting to have nuances to account for every situation, and wanting to have a set of policies we can enforce accurately and we can explain clearly.”

Another slide set out guidelines for words to watch out for.

The guidelines identified words like “Mujahideen,” which literally refers to people engaged in jihad, or struggle, as pro-terrorist. It is clear that Facebook is deciding for itself which connotations attach to the word “Mujahideen.” This is offensive to many people, and the platform fails to realize this.

The effort to counter online hate is always appreciated, but not when it comes at the cost of unchecked intrusion. Facebook has been accused multiple times of spreading misinformation and inciting hate against vulnerable groups.

If this is how content moderation is going to work, then Facebook ought to rethink its approach. What do you think?
