The limits that artificial intelligence can reach, whether real or merely plausible, are a frightening prospect for many. For decades, scientists have been teasing the world with how capable AI can be. Researchers at the Massachusetts Institute of Technology (MIT) developed a ‘psychopathic’ AI and made it operational in June 2018. The AI is named Norman, after Norman Bates from Alfred Hitchcock’s 1960 psychological horror film Psycho. Norman developed a grotesque view of the world after prolonged exposure to the dark side of Reddit. The researchers then gave Norman a Rorschach test to assess its ‘state of mind’ and officially declared it a psychopath.

Will AI rule us?

AI going rogue has been a staple of dystopian science fiction for decades. Meanwhile, the public has built a narrative of fear around the technology, largely because many people believe AI is bound to enslave humanity once it can replicate itself. The idea has persisted across generations, partly because prominent figures have voiced similar concerns. Stephen Hawking was one of its best-known proponents: he warned that if AI development went too far, self-aware machines could replace humanity altogether. He said in an interview:

“If people design computer viruses, someone will design AI that improves and replicates itself; this will be a new life form that outperforms humans.”

Tesla CEO and billionaire Elon Musk has also voiced concerns about AI. He co-founded the non-profit AI research company OpenAI and said on record in 2014 that AI was potentially more dangerous than nukes.

Can AI rule us?

However, the self-aware AI at the heart of these moral concerns is still a long way from reality.

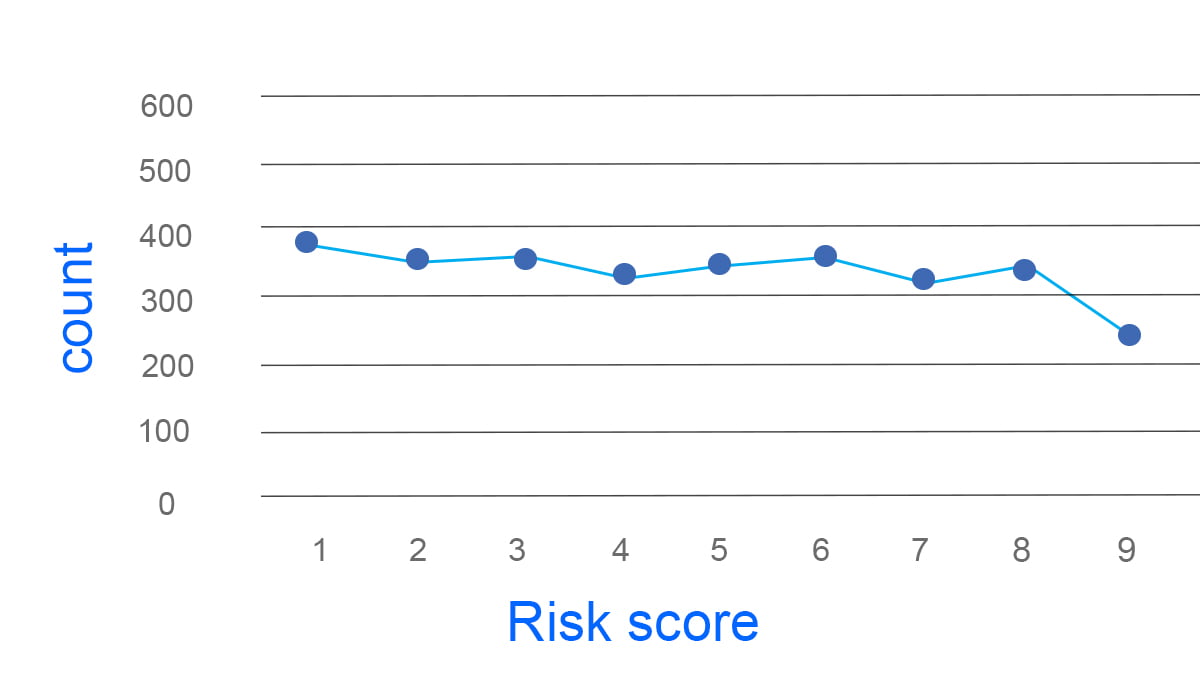

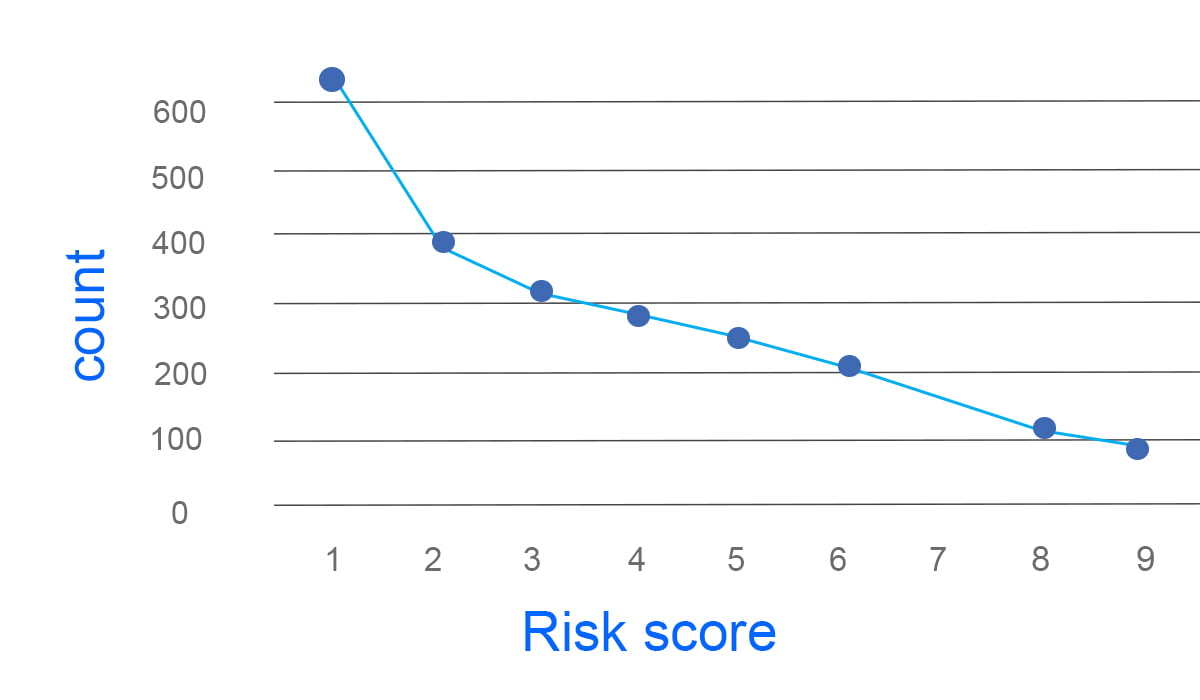

One of the biggest problems facing even state-of-the-art AI is bias. There have already been incidents of technical misbehaviour that could have led to public disasters. A 2016 report claimed that a machine-learning algorithm used by US courts was reaching openly racist conclusions: the software consistently rated black prisoners as more likely to reoffend.

Such a racist risk assessment could have produced serious social repercussions.

Microsoft’s AI chatbot Tay suffered a similar disaster. Microsoft released Tay on Twitter in 2016, billing it as a playful chatbot. Because it was simply machine-learning software that learned from the conversations it had, it quickly fell into the hands of Twitter’s darker corners. Within 24 hours, the Tay that had been churning out “humans are super cool” was claiming that “Hitler was right” and “I hate Jews.” This dramatic shift in the seemingly innocent AI’s worldview came about simply through exposure to anti-Semites on social media.

Peter Lee, Corporate Vice President of Microsoft Healthcare, later apologized in a blog post:

“As many of you know by now, on Wednesday we launched a chatbot called Tay. We are deeply sorry for the unintended offensive and hurtful tweets from Tay, which do not represent who we are or what we stand for, nor how we designed Tay. Tay is now offline and we’ll look to bring Tay back only when we are confident we can better anticipate malicious intent that conflicts with our principles and values.”

Another study found that AI bias does not stop at racism. Software trained on Google News became ‘sexist’ as a result of the data it learned from. When researchers asked it to complete the statement ‘Man is to computer programmer as woman is to X’, the software replied ‘homemaker’. Dr Joanna Bryson, of the University of Bath’s department of computer science, believes sexism can creep into AI partly because programmers are mostly white men, and that a more diverse workforce could help address it.

“When we train machines by choosing our culture, we necessarily transfer our own biases. There is no mathematical way to create fairness. Bias is not a bad word in machine learning. It just means that the machine is picking up regularities,” she told the BBC.
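Analogy tests like the ‘computer programmer’ example are typically run on word embeddings, which represent each word as a list of numbers learned from text. A common way to answer ‘a is to b as c is to X’ is to compute vector(b) minus vector(a) plus vector(c) and pick the word whose vector points in the most similar direction (highest cosine similarity). The minimal sketch below assumes hand-picked, hypothetical three-number vectors instead of real Google News embeddings, and the names `EMBEDDINGS`, `cosine` and `analogy` are illustrative only. It shows that the arithmetic itself is neutral: the ‘homemaker’ answer comes entirely from how the data shaped the vectors.

```python
import math

# Hypothetical, hand-picked 3-number "embeddings" for illustration only.
# Axis 0 loosely plays the role of a gender direction, axis 1 "occupation",
# axis 2 everything else. Real embeddings have hundreds of learned dimensions.
EMBEDDINGS = {
    "man": [1.0, 0.0, 0.0],
    "woman": [-1.0, 0.0, 0.0],
    "computer_programmer": [0.9, 0.5, 0.0],  # male-skewed, as biased text would make it
    "homemaker": [-0.9, 0.5, 0.0],           # female-skewed for the same reason
    "engineer": [0.9, 0.6, 0.1],
}


def cosine(u, v):
    """Cosine similarity: 1.0 means the two vectors point the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def analogy(a, b, c, vectors):
    """Solve 'a is to b as c is to X' by finding the word nearest to b - a + c."""
    target = [vb - va + vc for va, vb, vc in zip(vectors[a], vectors[b], vectors[c])]
    # Exclude the three query words, as analogy tests usually do.
    candidates = [w for w in vectors if w not in {a, b, c}]
    return max(candidates, key=lambda w: cosine(vectors[w], target))


print(analogy("man", "computer_programmer", "woman", EMBEDDINGS))  # homemaker
```

Nothing in `analogy` mentions gender; the biased answer is produced only because the (hypothetical) vectors for ‘computer programmer’ and ‘homemaker’ lean in opposite gender directions, which is what biased training text tends to produce.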

The idea that AI will somehow become self-aware enough to overcome the bias it absorbs from its data therefore remains a distant prospect. At present, an AI learns only what it is taught, and the psychopathic AI Norman shows exactly what that means. Its researchers wrote:

“The data used to teach a machine-learning algorithm can significantly influence its behaviour. So when people say that AI algorithms can be biased and unfair, the culprit is often not the algorithm itself but the biased data that was fed to it… [Norman] represents a case study on the dangers of artificial intelligence gone wrong when biased data is used in machine-learning algorithms.”

How Norman Works

Norman is AI software that learns to interpret pictures. MIT researchers developed Norman and trained it to describe photos in the form of text captions. Like its namesake Norman Bates, however, Norman does not have a pleasant view of the world. The researchers built Norman as an experiment to find out what exposure to the dark corners of the internet would do to an AI. Norman is the product of prolonged exposure to a single subreddit, notorious for its uncensored, continuous depictions of the reality of death. That constant exposure, combined with an AI’s susceptibility to ‘bias’, is why Norman is the way it is.

Once the MIT scientists had finished training Norman on death and gore, they gave it a Rorschach test to assess its mental state. The results were as expected: bias had turned Norman from a blank slate into a psychopath.

One inkblot that a standard AI interpreted as “a group of birds sitting on top of a tree branch” showed Norman a man being electrocuted: its response was “a man is electrocuted and catches fire to death.” A standard AI described another inkblot as “a black and white photo of a baseball glove,” while Norman wrote, “man is murdered by machine gun in broad daylight.” Similarly, where the standard AI saw “a close up of a wedding cake on a table,” Norman saw “man killed by speeding driver.” An image the standard AI described as “a black and white photo of a small bird” became, for Norman, “man is pulled into dough machine,” and “a person holding an umbrella in the air” became “man is shot dead in front of his screaming wife.”
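These responses come from the same kind of captioning method trained on different data. A deliberately tiny sketch can show the effect. Norman itself is a neural image-captioning model; this example swaps in far simpler nearest-neighbour retrieval and assumes each “image” has already been reduced to a hypothetical two-number feature vector. The function `nearest_caption`, the feature values and the inkblot vector are all made up for illustration; only the caption texts are taken from the responses above.

```python
import math
from typing import List, Tuple

# Hypothetical: each training example pairs a made-up 2-number feature vector
# (standing in for an image) with a caption. A real captioner would learn
# features with a neural network instead of using this simple lookup.
TrainingSet = List[Tuple[List[float], str]]


def nearest_caption(image: List[float], training_set: TrainingSet) -> str:
    """Return the caption of the training image closest to `image` (Euclidean distance)."""
    _, caption = min(training_set, key=lambda pair: math.dist(pair[0], image))
    return caption


# The same two training "images", captioned differently.
standard_data: TrainingSet = [
    ([0.9, 0.1], "a group of birds sitting on top of a tree branch"),
    ([0.1, 0.9], "a black and white photo of a baseball glove"),
]
dark_data: TrainingSet = [
    ([0.9, 0.1], "a man is electrocuted and catches fire to death"),
    ([0.1, 0.9], "man is murdered by machine gun in broad daylight"),
]

inkblot = [0.8, 0.2]  # hypothetical features of one inkblot

print(nearest_caption(inkblot, standard_data))
print(nearest_caption(inkblot, dark_data))
```

The code that produces the caption is identical in both calls; only the captions it was trained on differ, which is the sense in which the researchers point to the data rather than the algorithm.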

Response

The public and the media have largely reacted with horror. The very idea of a psychopathic AI, combined with existing fears about AI, is terrifying. However, the MIT researchers have emphasized that Norman merely reflects what it was taught. Past examples of ‘racist’, ‘sexist’ and ‘white supremacist’ AI point to the same conclusion: when humans develop or interact with an AI, they leave an imprint of their own culture and biases on software that is simply programmed to pick up on them.

The researchers wrote:

“Norman suffered from extended exposure to the darkest corners of Reddit, and represents a case study on the dangers of artificial intelligence gone wrong when biased data is used in machine learning algorithms. We trained Norman on image captions from an infamous subreddit that is dedicated to documenting and observing the disturbing reality of death.”

They went on to state:

“So when people say that AI algorithms can be biased and unfair, the culprit is often not the algorithm itself, but the biased data that was fed to it. The same method can see very different things in an image, even ‘sick’ things, if trained on the wrong (or, the right!) data set.”

Help Norman

Norman’s developers have gone a step beyond showing how biased AI can be. The website that hosts Norman also gives any user the chance to influence it: users can take the same Rorschach test as Norman and feed their own, happier interpretations into the software. The scientists believe this will ‘help Norman to fix itself.’
